The Power of Retrieval Enhanced Transformers (RETRO)

In recent years, autoregressive language modeling has seen significant performance improvements from increasing the number of parameters in Transformer models. This has produced a generation of dense “Large Language Models” (LLMs) with over 100 billion parameters. At the same time, massive datasets containing trillions of words have been collected to train these LLMs.

Introducing RETRO: Retrieval Enhanced Transformers

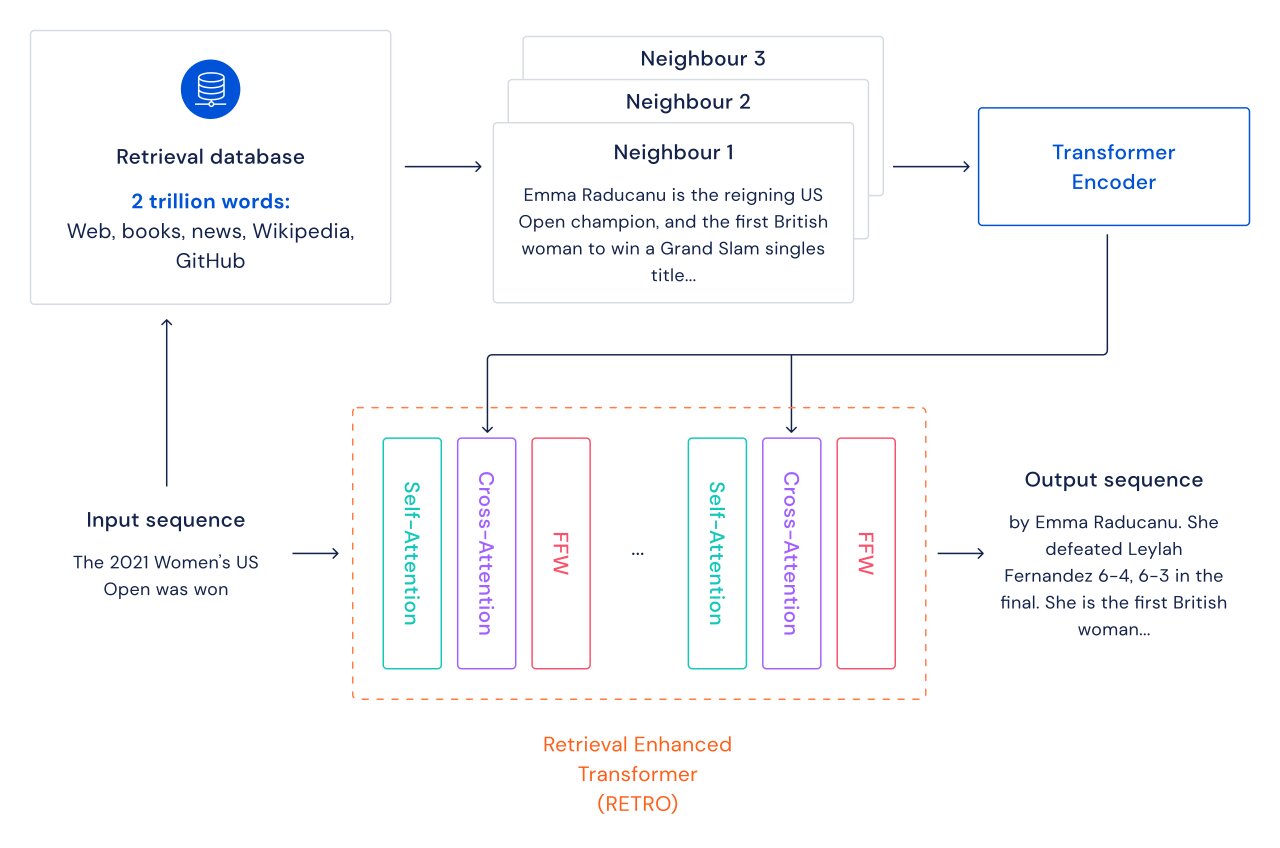

We have taken a different approach to improving language models. Instead of relying solely on model and data size, we augment transformers with retrieval over a database of text passages, including web pages, books, news, and code. This method, which we call RETRO (Retrieval-Enhanced Transformers), proves highly effective in our experiments.

Traditional transformer language models are limited by the size of the model and of the dataset they were trained on. RETRO, by contrast, is not constrained to the data it saw during training: through its retrieval mechanism, it has access to the entire training dataset. This yields significant performance gains over a standard Transformer with the same number of parameters.
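To make the retrieval idea concrete, here is a minimal Python sketch of a chunked retrieval database. It is a toy, not RETRO's implementation: the helper names (`embed`, `build_database`, `retrieve`) are hypothetical, the bag-of-words "embedding" and brute-force cosine search stand in for the learned embeddings and large-scale approximate nearest-neighbor search a real system would need, and chunks are counted in words rather than tokens. What it does show is the shape of the lookup: each database entry stores a chunk together with the text that followed it, so a query returns both a similar passage and its continuation.

```python
import math
import re
from collections import Counter

# Hypothetical toy retrieval database. A real RETRO-style system would use learned
# embeddings and approximate nearest-neighbor search at a vastly larger scale; the
# bag-of-words vectors and brute-force search below are stand-ins for illustration.


def embed(text):
    """Toy embedding: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_database(documents, chunk_size=4):
    """Split each document into fixed-size word chunks; store (chunk, continuation, embedding)."""
    database = []
    for doc in documents:
        words = doc.split()
        chunks = [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]
        for i, chunk in enumerate(chunks):
            continuation = chunks[i + 1] if i + 1 < len(chunks) else ""
            database.append((chunk, continuation, embed(chunk)))
    return database


def retrieve(query, database, k=1):
    """Brute-force k-nearest-neighbor search: the k most similar (chunk, continuation) pairs."""
    q = embed(query)
    ranked = sorted(database, key=lambda entry: cosine(q, entry[2]), reverse=True)
    return [(chunk, continuation) for chunk, continuation, _ in ranked[:k]]


documents = [
    "the digits of pi begin 3.14159 and continue 26535 89793 onward",
    "the eiffel tower is located in paris france",
]
db = build_database(documents, chunk_size=4)
print(retrieve("the digits of pi", db, k=1))
# [('the digits of pi', 'begin 3.14159 and continue')]
```

Because the database is plain data, editing it changes what can be retrieved without retraining anything: dropping a document from `documents` and rebuilding removes it from every future lookup. This is the kind of direct intervention through the retrieval database that a retrieval-based design makes possible.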

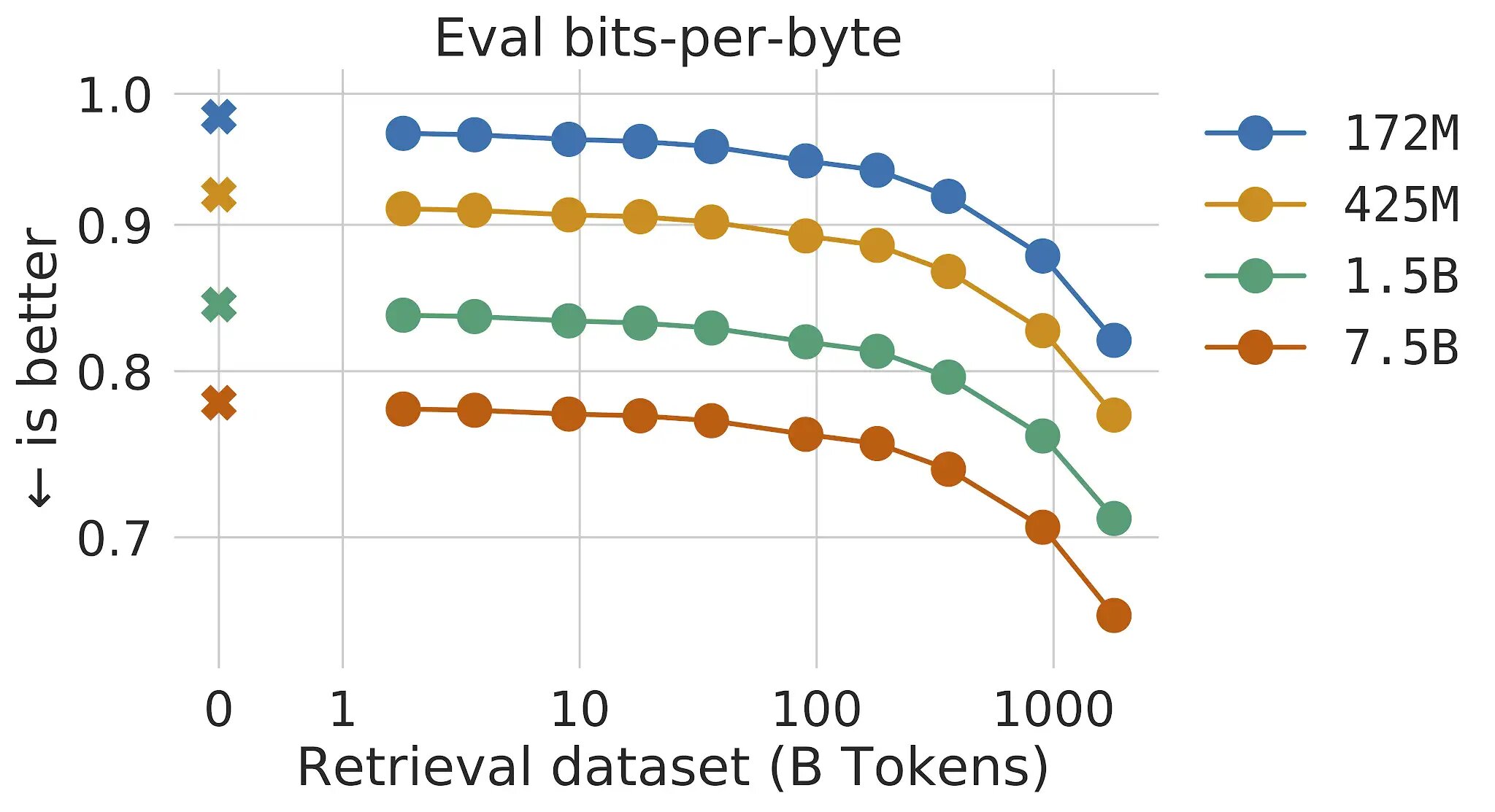

We find that language modeling improves continuously as we increase the size of the retrieval database. In our experiments, the gains persist at least up to 2 trillion tokens, which is equivalent to roughly 175 full lifetimes of continuous reading.
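The "175 lifetimes" comparison can be unpacked with simple arithmetic. The first line follows directly from the numbers above; the second and third assume an 80-year lifetime of round-the-clock reading (365.25 days per year), which is our own illustrative assumption rather than a figure from this post.

```latex
\begin{aligned}
\frac{2 \times 10^{12}\ \text{tokens}}{175\ \text{lifetimes}} &\approx 1.143 \times 10^{10}\ \text{tokens per lifetime} \\
80\ \text{yr} \times 525{,}960\ \tfrac{\text{min}}{\text{yr}} &= 42{,}076{,}800\ \text{min} \approx 4.208 \times 10^{7}\ \text{min} \\
\frac{1.143 \times 10^{10}\ \text{tokens}}{4.208 \times 10^{7}\ \text{min}} &\approx 272\ \text{tokens per minute}
\end{aligned}
```

Under that assumption, the retrieval database corresponds to reading about 272 tokens every minute, without pause, for 175 consecutive 80-year lifetimes.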

Key Features of RETRO:

- Nearest-Neighbor Search: For each text passage (roughly a paragraph of a document), a nearest-neighbor search returns similar sequences from the training database, together with their continuations.

- Factual Continuation: The retrieved sequences help predict the continuation of the input text, resulting in more accurate and factual continuations.

- Interleaved Attention: RETRO interleaves regular self-attention at the document level with cross-attention to the retrieved neighbors at a finer, passage level.

- Enhanced Interpretability: RETRO’s architecture makes model predictions more interpretable and allows for direct interventions through the retrieval database to enhance the safety of text continuation.
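The interleaving of document-level self-attention and passage-level cross-attention can be illustrated with a deliberately simplified, pure-Python sketch. Everything here is an assumption for illustration: there are no learned projections, multiple heads, or feed-forward layers; the neighbors are assumed to be already encoded as vectors; and the layer schedule (`cross_every`) and all function names are hypothetical. To keep the toy causal, tokens in chunk u cross-attend only to the neighbors retrieved for chunk u − 1, because the neighbors of chunk u are found using the whole of chunk u, including tokens that its earlier positions must not see. The published RETRO layer aligns chunks and neighbors somewhat differently, so treat this as a sketch of the idea, not the architecture.

```python
import math

# Simplified illustration of RETRO-style interleaved attention (hypothetical, not the
# published layer): no learned projections, heads, or feed-forward blocks, and the
# retrieved neighbors are assumed to be already encoded as vectors.


def add(a, b):
    """Element-wise vector addition (used for residual connections)."""
    return [x + y for x, y in zip(a, b)]


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([scale * sum(q * k for q, k in zip(query, key)) for key in keys])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]


def causal_self_attention(h):
    """Document level: position i attends to positions 0..i of the whole sequence."""
    return [attend(h[i], h[: i + 1], h[: i + 1]) for i in range(len(h))]


def chunked_cross_attention(h, neighbors, chunk_size):
    """Passage level: tokens in chunk u attend to the encoded neighbors of chunk u - 1.

    Chunk 0 has no earlier chunk, so its tokens receive a zero update.
    """
    out = []
    for i, x in enumerate(h):
        u = i // chunk_size
        context = neighbors[u - 1] if u > 0 else []
        out.append(attend(x, context, context) if context else [0.0] * len(x))
    return out


def retro_style_decoder(tokens, neighbors, chunk_size, num_layers=4, cross_every=2):
    """Self-attention in every layer; chunked cross-attention every `cross_every` layers."""
    h = [list(t) for t in tokens]
    for layer in range(1, num_layers + 1):
        h = [add(x, y) for x, y in zip(h, causal_self_attention(h))]
        if layer % cross_every == 0:
            h = [add(x, y) for x, y in zip(h, chunked_cross_attention(h, neighbors, chunk_size))]
    return h


# Six 2-d token vectors in three chunks of two; one list of neighbor vectors per chunk.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5], [0.2, 0.8], [-1.0, 0.3]]
neighbors = [[[0.9, 0.1], [0.1, 0.9]], [[0.3, 0.3]], [[-0.5, 0.5]]]
output = retro_style_decoder(tokens, neighbors, chunk_size=2)
print(len(output), len(output[0]))  # 6 2
```

In this toy, the last chunk's neighbors are never read, and changing `neighbors[0]` leaves the first chunk's outputs untouched while altering later chunks: retrieved information only flows forward through the sequence.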

In our experiments on the Pile, a standard language modeling benchmark, a 7.5 billion parameter RETRO model outperforms the 175 billion parameter Jurassic-1 on 10 out of 16 datasets and surpasses the 280 billion parameter Gopher on 9 out of 16 datasets.

Sample Results with RETRO

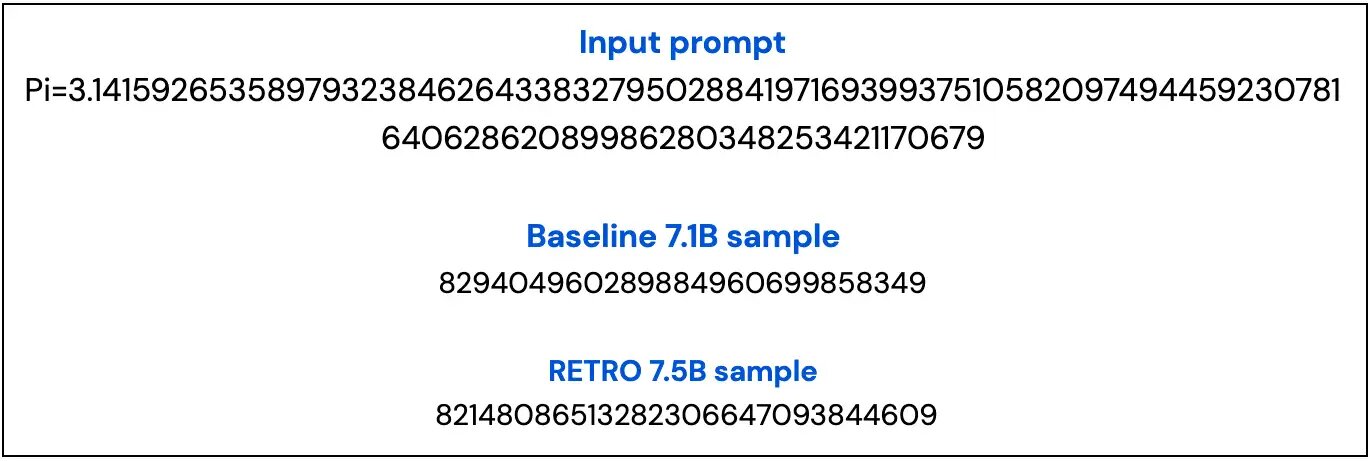

Figure 3: The baseline model generates only 2 correct digits, whereas with RETRO the correct digits are generated after being retrieved from the database.

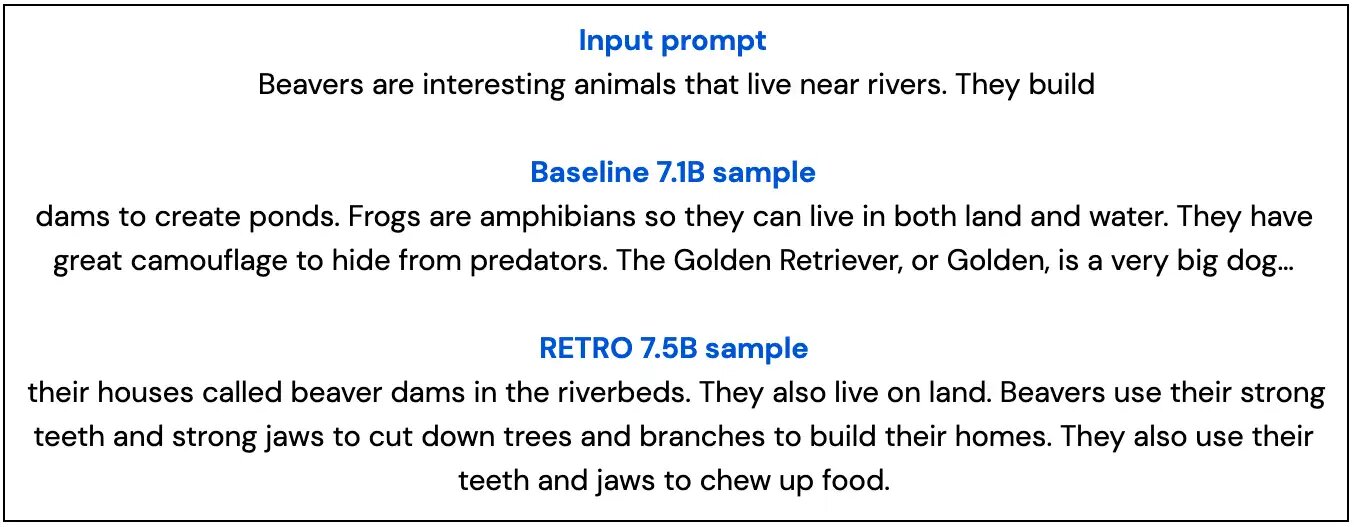

Figure 4: The RETRO model maintains better topic relevance compared to the baseline sample.

Overall, RETRO’s integration of retrieval with transformer models offers exciting possibilities for improving language modeling. By giving the model access to a vast retrieval database, we have seen clear gains in accuracy, factuality, and topic relevance. RETRO represents a significant step forward in the development of powerful and interpretable language models.